AI Act

The EU Commission developed its AI Act, the first-ever legal framework on AI, which addresses the risks of AI and positions Europe to play a leading role globally.

The AI Act provides AI developers and deployers with clear requirements and obligations regarding specific uses of AI. At the same time, the regulation seeks to reduce administrative and financial burdens for businesses, in particular small and medium-sized enterprises (SMEs).

The AI Act is part of a wider package of policy measures to support the development of trustworthy AI, which also includes the AI Innovation Package and the Coordinated Plan on AI. Together, these measures guarantee the safety and fundamental rights of people and businesses when it comes to AI. They also strengthen uptake, investment and innovation in AI across the EU.

The AI Act is the first-ever comprehensive legal framework on AI worldwide. The aim of the new rules is to foster trustworthy AI in Europe and beyond, by ensuring that AI systems respect fundamental rights, safety, and ethical principles and by addressing risks of very powerful and impactful AI models.

Why do we need rules on AI?

The AI Act ensures that Europeans can trust what AI has to offer. While most AI systems pose limited to no risk and can contribute to solving many societal challenges, certain AI systems create risks that we must address to avoid undesirable outcomes.

For example, it is often not possible to find out why an AI system has made a decision or prediction or taken a particular action. As a result, it may be difficult to assess whether someone has been unfairly disadvantaged, such as in a hiring decision or in an application for a public benefit scheme.

Although existing legislation provides some protection, it is insufficient to address the specific challenges AI systems may bring.

The new rules:

- address risks specifically created by AI applications

- prohibit AI practices that pose unacceptable risks

- determine a list of high-risk applications

- set clear requirements for AI systems for high-risk applications

- define specific obligations for deployers and providers of high-risk AI applications

- require a conformity assessment before a given AI system is put into service or placed on the market

- put enforcement in place after a given AI system is placed on the market

- establish a governance structure at European and national level

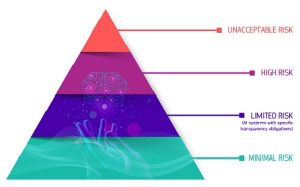

A risk-based approach

The regulatory framework defines four levels of risk for AI systems: unacceptable risk, high risk, limited risk, and minimal or no risk.
